OCAU Server Upgrade


Software and Testing, Hindsight, Conclusions

The other excellent thing about this 1RU server is that it arrived with a pile of spare bits including a 300W 1RU PSU, an entire spare motherboard identical to the one being used, another 1RU cooler and a box full of 40mm Sunon fans. Some other misc connectors and converters were handy too, but most significant was the inclusion of a 64-bit PCI riser! That was whisked off to be used in pie, so the Adaptec RAID controller was now happily using a full 64-bit PCI slot.

Speaking of pie, thermal load testing had revealed a hot-spot in the top-left corner of the case, over the PCI slots. It wasn't extreme, but the RAID card was getting pretty warm according to the highly scientific finger trick: if you can't rest your finger on something for a few seconds, it's hotter than about 60C, which is too hot. Moving the Adaptec card to the 64-bit PCI riser made this problem worse, because it's a 1RU riser, which has the card lying flat along, and only just above, the PCI slots. Adaptec don't fit heatsinks to any components on this card, so presumably they're not too concerned about heat, but to be safe I mounted one of the 40mm Sunon fans that came with the 1RU server onto a rail running along the middle of the 2RU case. It blows down and under the Adaptec card, which cooled it down a lot even under heavy use. It also helps keep the air moving through that hot spot of the case, past the CPUs and towards the rear exhaust fan.

Private Gigabit:

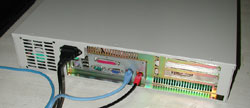

With pie handling the MySQL databases and chips running the Apache webserver, they obviously need to talk to each other almost constantly. For example, when you request a forum thread you will be talking to Apache on chips, which will query MySQL on pie and construct the thread with the data it receives, sending you the completed page. Each server will use its onboard 100Mbit Ethernet to talk to the internet and they will be able to see each other through that interface, but it would be better if we could separate the internal, inter-server SQL traffic from the traffic destined for the internet. The 100Mbit Ethernet is in no way going to be stressed, but in terms of system interrupts and from a traffic-monitoring perspective it's better to separate the data. By an excellent coincidence, both motherboards also have onboard Intel Gigabit Ethernet controllers. AUD $7 got us a 1m Cat6 crossover cable from, of all places, Dick Smith Powerhouse. This lets us connect the two GigE controllers directly together and, using non-routable TCP/IP addresses, we can have the servers talk across this insanely fast link. How fast? How does 100MB/sec sound? Yes, that's a big B, for bytes. I FTP'd a 1.1GB tar.gz archive across the link in 11 seconds. We can easily swamp the IDE drive in chips by FTPing something across this link onto it and watching the transfer speed drop to "only" 45MB/sec. So, for short bursts of inter-server traffic this will be blisteringly fast. In the pictures below, the crossover cable is the black cable in the rightmost Ethernet port on both machines.
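As a quick sanity check on those figures, here is the arithmetic as a small Python sketch. The size and time are the ones quoted above; treating 1GB as 1000MB and ignoring protocol overhead are simplifying assumptions, and the function names are just for illustration.

```python
def throughput_mb_per_s(size_gb: float, seconds: float) -> float:
    """Average transfer rate in MB/sec, assuming 1 GB = 1000 MB."""
    return size_gb * 1000 / seconds


def line_rate_mb_per_s(megabits_per_s: float) -> float:
    """Raw link capacity in MB/sec (8 bits per byte), ignoring protocol overhead."""
    return megabits_per_s / 8


measured = throughput_mb_per_s(1.1, 11)   # the 1.1GB archive sent in 11 seconds
gigabit = line_rate_mb_per_s(1000)        # private GigE link
fast_ethernet = line_rate_mb_per_s(100)   # onboard 100Mbit interface

print(f"measured transfer:  {measured:.1f} MB/sec")
print(f"GigE raw capacity:  {gigabit:.1f} MB/sec")
print(f"100Mbit capacity:   {fast_ethernet:.1f} MB/sec")
print(f"share of GigE used: {measured / gigabit:.0%}")
```

Under those assumptions the FTP transfer ran at roughly 80% of the link's raw capacity, and about eight times faster than the 100Mbit interface could manage at best.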

Once the physical side of things was sorted, with all cables neatly tied away, airflow optimised and cooling sorted, it was time to make sure the system would perform as we expected. Of course, it's virtually impossible to simulate the load of 600 people using the forums, another few hundred on the site body, reading reviews etc, while the server handles the background jobs of sending email, responding to DNS requests and other tasks. As with most reviews we need to isolate systems and test them individually, giving us an overall picture of how the system will perform. Certainly on paper these two servers should blow Thor out of the water, but we want to make sure there are no misconfigurations or other problems that will be difficult to fix once the servers are offsite.

Software and Testing:

Slackware is the Linux distribution that AusGamers prefer, and as they will be maintaining these servers we will of course use that. To be honest, due to this policy on the previous server I have been converted from Debian to Slackware and I'm not really looking back. Slackware 9.1 was the latest at the time, and is compatible with the new 2.6.x kernel branch. Actually, when I first set up Ragnarok with the 2.4.x kernel there were issues with drivers for some of the SCSI equipment, but months later in 2.6.x it seems fairly mature. For basic reliability testing both machines were left running for a week, with cron jobs set up to copy files around the machine every hour and Folding@Home running on both CPUs. No unexpected reboots or worrying messages were logged, which is a good sign. Goldmem was also left running overnight on both machines to check the memory, caches etc.

I have full backups of OCAU's databases and various software, so I could test those and make sure they were compatible with the software on the server. It's a little eerie being the only person browsing a copy of the forums in the middle of the day. Using ab, the Apache Benchmark that comes with the server package, I could simulate many hundreds of concurrent thread views, as well as loading OCAU's index page and various other scripts that run the site.
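To give a rough idea of what a concurrent test like this involves, here is a minimal Python sketch of the same idea. It is not ab and not what was actually run; the function names are made up for illustration, and it only uses the standard library. It sends a fixed number of requests with a capped number in flight at once and summarises the response times.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict
from urllib.request import urlopen


def fetch(url: str) -> float:
    """Request one page, read it fully, and return the elapsed time in seconds."""
    start = time.perf_counter()
    with urlopen(url, timeout=30) as response:
        response.read()
    return time.perf_counter() - start


def run_load(fetch_one: Callable[[str], float], url: str,
             total_requests: int, concurrency: int) -> Dict[str, float]:
    """Issue total_requests fetches with at most `concurrency` in flight at once."""
    if total_requests < 1 or concurrency < 1:
        raise ValueError("total_requests and concurrency must be at least 1")
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # pool.map runs fetch_one across the worker threads and keeps the order
        timings = list(pool.map(fetch_one, [url] * total_requests))
    return {
        "requests": len(timings),
        "mean_seconds": sum(timings) / len(timings),
        "slowest_seconds": max(timings),
    }
```

A call such as `run_load(fetch, "http://localhost/forums/", 500, 100)` would fire 500 requests, 100 at a time, at a test copy of the site. The URL there is hypothetical, and passing the fetch function in as a parameter makes it easy to swap in a stub when trying the code out.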

Finally, I got the call from Term at AusGamers to take the servers in to Comindico's datacentre in Sydney. I dropped them off and a few days later Trent put them into the rack to replace Thor, and then he and Jason spent a few hours copying the live data over from Thor and configuring/securing the servers to their standard.

The servers were connected up, we loaded MySQL and Apache... and everything worked. So, to summarise, OCAU is now served from Chips, a P4 3.06GHz machine, with the back-end databases handled by Pie, a dual AthlonMP 2800+ box.

Pie and Chips, sending you this page

Hindsight:

As I mentioned earlier, this article covers a process going back about a year. We've been running on the new servers for about 7 months now and, once some minor teething issues were sorted out, their performance has been very good. We were Slashdotted at one point, which was a good stress-test and let us tweak the servers under high load. After some reconfiguration the site coped extremely well. We now serve in the order of 10 million page views every month and our record for online forum users has shot up to over 800 concurrent users.

Having said that, there are still some things I would maybe do differently next time or that still need work. Firstly I might consider a more serious RAID setup for the databases or even run an entire machine off a RAID10 array. When you think about it, in terms of write performance we have gone from a single 10k-rpm U160 drive to the equivalent of a single 15k-rpm U320 drive, which isn't really that big a jump. Admittedly we have split up the load significantly - most importantly separating the sequential writes of the Apache logfiles from the random forum-database load. We've also dramatically increased the read performance for the databases. However, I have a nagging feeling that the next bottleneck we will hit, even though it will be off in the future sometime, will be the RAID array's write performance. On the other hand, since moving to vBulletin 3 in the forums, the load on the RAID array has dropped considerably.

Secondly, we're seeing occasional load spikes on pie, which seem to be coming from the RAID array (the IOWAIT value reported by "top" spikes to over 95% on both CPUs). My initial research indicated that ReiserFS was the current filesystem of choice and we've used it across both machines. However, several people have since told me that ReiserFS may not be ideal for database load, and could be responsible for these load spikes. They're not related to forum load: when we broke the forum record recently there was virtually no IOWAIT reported. But at seemingly random times pie will slow down with these symptoms, even when not many people are online. It will recover by itself, and recovers so quickly when mysqld is manually reloaded that it's usually transparent to forum-goers, but a better long-term solution would be to move to XFS on the array. This requires a kernel recompile so we'll leave it for a while yet and do some more thinking in the meantime. Of course, I could well be totally off track with this thinking so as always I'm open to feedback.
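For anyone wanting to log these spikes rather than catch them by eye in "top", the kernel exposes the underlying per-CPU tick counters in /proc/stat on Linux. The sketch below is illustrative only, with made-up function names: it takes two samples of a "cpu" line and works out what share of the elapsed time went to iowait. It assumes a kernel that reports an iowait column, which was added during the 2.5.x development series.

```python
from typing import List


def parse_cpu_line(line: str) -> List[int]:
    """Return the tick counters from one 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or not fields[0].startswith("cpu"):
        raise ValueError(f"not a cpu line: {line!r}")
    return [int(value) for value in fields[1:]]


def iowait_percent(before: str, after: str) -> float:
    """Percentage of elapsed CPU time spent in iowait between two samples."""
    old, new = parse_cpu_line(before), parse_cpu_line(after)
    # Counter order: user, nice, system, idle, iowait, irq, softirq, steal.
    # Later fields (guest time) are already counted in user, so skip them.
    deltas = [b - a for a, b in zip(old[:8], new[:8])]
    total = sum(deltas)
    if len(deltas) < 5 or total <= 0:
        raise ValueError("samples lack an iowait field or show no elapsed time")
    return 100.0 * deltas[4] / total


def read_cpu_lines(path: str = "/proc/stat") -> List[str]:
    """Read the per-CPU lines (cpu0, cpu1, ...) from a Linux /proc/stat."""
    with open(path) as stat_file:
        return [line for line in stat_file
                if line.startswith("cpu") and line[3].isdigit()]
```

Calling `read_cpu_lines()` twice a few seconds apart and feeding matching lines to `iowait_percent` gives one figure per CPU, which could be written to a log from cron and compared against forum activity later.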

More memory will be a requirement for any future servers. Given the size of the databases and the number of Apache processes we want to use, a combined total of at least 4GB will be required.

Finally, although the IDE drive in chips is handling the logging load of Apache fine, when the logfiles become really large we are seeing a few zombified Apache processes, which affect performance. These are presumably waiting to write their latest request to the logfile. It only seems to happen when the file is 2GB or more, and if we cycle our logfiles every week as we normally do, we shouldn't see this issue. Working with the logfiles, for example gzipping them to save space or running them through Webalizer to analyse our traffic, does not produce the load spikes I expected. In fact, this is one of the most dramatic ways that chips outperforms Thor.
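A simple guard against this is to flag any logfile that is getting close to that size before the weekly rotation comes around. The Python sketch below is only an illustration: the 2GB threshold comes from the observation above, while the 80% warning margin and the function names are assumptions.

```python
import os
from typing import Dict, List

TWO_GB = 2 * 1024 ** 3  # assumed threshold, from the "2GB or more" observation


def logs_needing_rotation(sizes: Dict[str, int], threshold: int = TWO_GB,
                          warn_fraction: float = 0.8) -> List[str]:
    """Return names of logfiles at or above warn_fraction of the threshold, sorted."""
    limit = threshold * warn_fraction
    return sorted(name for name, size in sizes.items() if size >= limit)


def logfile_sizes(log_dir: str) -> Dict[str, int]:
    """Map each regular file in log_dir to its size in bytes."""
    return {entry.name: entry.stat().st_size
            for entry in os.scandir(log_dir) if entry.is_file()}
```

Something like `logs_needing_rotation(logfile_sizes("/var/log/apache"))` run daily would list the files to cycle early; the directory path is hypothetical and would need to match wherever Apache actually writes its logs.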

Conclusions:

Well, I've certainly learned a lot during this project and I hope you've enjoyed this article. More importantly, I hope you enjoy the performance of our new servers! Being on dialup myself it's difficult to tell how much better things are performing, but from observing the server load and our traffic graphs, I can see we're doing a lot more traffic with a lot less effort than previously. The general feedback I get from people is also very positive, even when there's a huge number of people using the website.

Many thanks to Plus Corporation for their help putting together our database server. Thanks also to AusGamers and Comindico for continuing to host us!

As I mentioned earlier, this article is very late. It got a little buried under the usual OCAU work and I never got around to finishing it off. I was reminded of it recently and tidied it up enough for publication. What reminded me of it is that we have a bit of a surprise coming in terms of what will power OCAU in future. That's all I'll say for now... but stay tuned. :)


All original content copyright James Rolfe.

All rights reserved. No reproduction allowed without written permission.
