2009 OCAU Server Upgrade


Dell PowerEdge R610 Info, Conclusion

So, that brings us to the next machine. The Sun Fire X4150 I'd picked up in the forums was a bargain and a fun build, but such opportunities are few and far between. Time was ticking away so I bit the bullet, dug out the credit card and started hunting. After looking around, it seemed Dell's new PowerEdge R610 was the best option for us. I've played with Dell gear before and been impressed both by the quality and the support. Although this machine is taking the role previously taken by our "BBQ" server, in terms of hardware it is two generations on from "beer", which was a PowerEdge 1850. That was superseded by the PowerEdge 1950, then that product line was replaced by the R610.

By the way, if you have decided on a Dell (this is possibly true for other vendors), it's definitely worth ringing the nice Indian man on the telephone and getting him to discount or throw in goodies. We ended up with a server roughly twice the spec, with extras like a DVD kit and drive rails, for a couple of hundred dollars less than the original, lower-specced online quote - not bad for a 10 minute phone call.

Anyway, before long a large box arrived.

There's less to talk about with this machine of course because, unlike the Sun, I basically didn't do any real hardware configuration. I ordered what we needed and it arrived pre-assembled. But I'll give you a tour of the machine because it's interesting to see how two different manufacturers solve the same issues. First up, this machine has two Intel Xeon E5506 CPUs, each 2.13GHz with 4MB cache. These are not top-flight CPUs, but again, with 8 cores on tap that is plenty of multi-user grunt. It's worth noting that these are Nehalem-based Xeons. The Sun uses the previous generation, Core 2 Quad, whereas these are Core i7. Check out our earlier Nehalem article for info on the new platform.

What's the real-world difference? Despite only a 133MHz difference in CPU speed, when I had test installs of the forums running individually on each of our new servers side by side, I found the Dell machine consistently 30% to 100% faster (at best taking half as long) to complete search results. And don't think the Sun machine is slow! The Dell is just brutally fast. Historically running an IP address search was a good way to bring our forums to their knees - there's a sticky thread in the Admin Discussion forum asking admins NOT to do IP searches during peak times. Nowadays we can run multiple IP address searches at the same time if needed, and it has no noticeable effect on forum speed.

The Dell has a neat front-panel diagnostic/config screen, as well as a DVD drive and as mentioned earlier four 15k-rpm 73GB SAS drives. We moved two of these drives over to the Sun to be the system volume there, and put four 36GB 15k drives into the Dell for the database RAID10 array.
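As a rough sanity check on that database array, here is a minimal sketch of RAID10 capacity arithmetic. It assumes the standard RAID10 layout (striping across mirrored pairs, so half the raw space is usable); the function name is made up for illustration and real controllers reserve a little space for metadata.

```python
def raid10_usable_gb(drive_count: int, drive_gb: int) -> int:
    """Usable capacity of a RAID10 array built from identical drives.

    RAID10 stripes across mirrored pairs, so every drive has one
    mirror partner and only half of the raw capacity is usable.
    """
    if drive_count < 4 or drive_count % 2 != 0:
        raise ValueError("RAID10 needs an even number of drives, at least 4")
    return (drive_count // 2) * drive_gb


if __name__ == "__main__":
    # Four 36GB 15k drives, as fitted to the Dell for the database array.
    print(raid10_usable_gb(4, 36), "GB usable")
```

So the four 36GB drives give roughly 72GB of mirrored, striped space for the databases.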

Note the Dell only has 6 drive bays compared to the Sun's 8. Also note the annoying blank drive bay filler instead of a useful caddy - never mind, picked up a couple on eBay.

There's a quite handy locking handle for removing the top panel, a definite improvement over the one I'm used to from the PowerEdge 1850 days. Many times I've had to basically use my bodyweight and the heels of my hands to get the panel open. Not so on the R610, and under the lid you find all kinds of useful service information. I've made that picture large so you can check it out if you're keen.

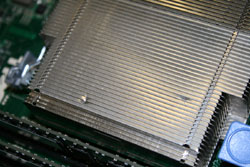

Anyway, with the lid off the innards are exposed, and it's interesting to compare them with the Sun box - that picture is here if you're doing a side-by-side comparison. At far left bottom of the picture we have the two redundant hot-swappable 717W PSUs, above that the PCIe expansion slots with risers and various system connectors. The card placed oddly in the centre with the black cable coming out of it is the PERC 6/I RAID controller - that cable goes to the front drive cage. Smack in the middle are the heatsinks for the two CPUs and on either side the memory slots, fully populated in our case. Still heading to the right of the picture we see the array of 12 system fans which draw air through the machine from front to back for cooling, then at far right are the drive cages and the electronics for the front panel connectors.

The memory is DDR3 as required by Nehalem, and in our case there's a 2GB stick in each of the 12 slots, giving of course 24GB in total. 24GB might seem like an unusual amount, but remember that Nehalem has three memory channels. This machine largely replaces BBQ, which had 16GB, but 16GB doesn't divide evenly into three, so we need 24GB to get the full benefit of those three channels. 24GB also gives us some room to grow, remembering of course the original requirement for this machine to last us at least 3 years without upgrades, and to handle a lot more traffic than the machine it replaces. The RAID card shown above right isn't too exciting to talk about, although of course it's important. It has 256MB of cache onboard and, although it looks oddly positioned in the machine, it sits in a normal PCI-Express x8 slot.
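The channel arithmetic can be checked with a short sketch. It assumes the 12 slots are arranged as 2 CPU sockets x 3 channels x 2 slots per channel (inferred from the slot and channel counts, not taken from Dell's documentation), and the function names are purely illustrative.

```python
def balanced_total_gb(sockets: int, channels: int, dimms_per_channel: int, dimm_gb: int) -> int:
    """Total memory when every channel on every socket holds the same DIMMs."""
    return sockets * channels * dimms_per_channel * dimm_gb


def fills_channels_evenly(total_gb: int, sockets: int, channels: int, dimm_gb: int) -> bool:
    """True if total_gb can be built by giving every channel the same
    number of identical DIMMs, which keeps all channels equally loaded."""
    gb_per_round = sockets * channels * dimm_gb  # one DIMM in every channel
    return total_gb % gb_per_round == 0


if __name__ == "__main__":
    # 2 sockets, 3 channels each, 2 slots per channel, 2GB sticks (assumed layout).
    print(balanced_total_gb(2, 3, 2, 2))        # all 12 slots populated
    print(fills_channels_evenly(24, 2, 3, 2))   # the configuration ordered
    print(fills_channels_evenly(16, 2, 3, 2))   # BBQ's old 16GB figure
```

With 2GB sticks, one stick per channel across both CPUs is 12GB, so balanced totals come in steps of 12GB: 24GB fits, 16GB does not.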

An impressive array of fans keeps the machine cool. The heatsinks are smaller than those in the Sun machine, and one came pre-dented by Dell which isn't exactly confidence-inspiring, but they seem to do the job just fine. In fact, one very notable thing about the Dell is that it is silent when idling. I don't mean quiet, I mean I couldn't hear it over my desktop machine unless I was really stressing it. This was a revelation to me. ALL previous enterprise-class hardware I've used has been seriously unpleasant to even be in the same room with for any length of time. Lots of fans taking themselves very seriously make for an irritating tearing white noise sound which drives you mental in no time at all. Not so with the latest generation Dells it seems - it was a pleasure to configure this machine even only a few feet away.

The dual redundant hot-swappable PSUs are rated to 717W each, and tucked away in a corner of the system board is an internal USB connector, as also seen on the Sun - although the Sun has two. Again, this is a neat little way to give yourself a last-chance backup, or to keep your USB dongles safe from being snapped off or lost in the rack.

A small pile of documentation arrived with the server, covering the machine itself, the optional RAID card and including free trials of virtualisation software etc. In case you're wondering, we are not using any virtualisation with either of our servers although they both support it. Why? Well, largely because I have zero experience with virtualisation and am therefore uncomfortable moving the production servers over to it. Also, from my limited understanding, a "bare metal" config is better for us because our primary concern is performance, and although reliability is of course something we also need, we get that via the somewhat oldschool method of having physical redundancy. I'm not going to get into a holy war with virtualisation evangelists though, I'm sure there would be some benefits to it, and it's something we'll look at in future.

Also of course drive rails were included. On the Dell these are completely tool-less, just snapping into place on the machine and in the rack. It took about four times as long to install the Sun thanks to an abundance of screws and bolts. Drive rails are important because they let you slide the machine out of the rack for maintenance. On the Sun for example the internal fans are hot-swappable, so if one fails, you can slide the machine out (being careful not to yank cables out of the back - "cable arms" are designed to solve this issue), pop the fan cover, pull out the faulty fan module identified by a fault LED, plug in the replacement part, slide the server back into the rack and nobody will have experienced an outage.

Finally, the Dell has a nice metal faceplate which provides physical security and stops people from either powering it off via the power switch or pulling hard drives out of the front. However, the rack we're in is secured, and having the faceplate on just makes it more difficult for a technician to get things done if you're talking to them over the phone when there's a problem. So the faceplate stays at home.

On the back of the server from left to right we have the PCI-Express back panels, diagnostic/monitoring ports, a VGA port, two USB ports, four Gigabit Ethernet ports, a diagnostic LED, "identify" LED and button and the two hot-swappable PSUs. The "identify" button and LED are simply so you can find the specific machine you want in the rack. Imagine you have a room full of racks, each of which has 48 identical servers in it. Firstly you can turn on the LED via remote management, which illuminates a bright blue LED at the front and a bright white LED at the rear of the machine. Also, you can press the "identify" button on the front or the rear which lights up both ends again, so when you walk around the rack or down the hallway full of racks to get to the other side, you can once again locate the correct server easily. Hitting the button again turns the lights off. This is common to virtually all servers going back several generations now. The fault LED generally just lights up or flashes a pattern to indicate a fault has occurred somewhere in the machine - the Dell has a diagnostic panel on the front for more info.

Configuration Info:

After doing some testing and general configuration, I had to lock down how we were going to spread the load across the two servers. Two things became obvious early on. Firstly, the Dell is noticeably quicker in terms of CPU grunt and memory speed. Secondly, the RAID setup on the Sun is a lot quicker than the Dell. Fortunately that fit pretty much perfectly with my plans. The Sun took over the master database load, aided by its extra RAM and fast RAID. But surely the faster CPUs and memory of the Dell would be better for database work, you ask? The answer is that vBulletin, the forum software we use, is aware of the master-slave relationship and sends lots of queries, including those intensive searches, to the slave. So the Dell in fact does quite a lot of the CPU and RAM intensive SQL work, while the Sun is responsible for accepting and storing submitted data, which is then mirrored to the Dell in the background.
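To make the master/slave split concrete, here is a minimal sketch of read/write routing. This is not vBulletin's actual code: the function, the server labels and the rule "every read goes to the slave" are simplifying assumptions for illustration (the real software only sends some reads, such as searches, to the slave).

```python
# SQL verbs that change data and therefore must go to the master.
WRITE_VERBS = {"INSERT", "UPDATE", "DELETE", "REPLACE"}


def choose_server(sql: str, master: str = "sun", slave: str = "dell") -> str:
    """Pick which database server should run a statement.

    Writes go to the master, which replicates them to the slave in the
    background. Everything else (e.g. SELECT-heavy searches) goes to
    the slave, keeping the expensive read work off the master.
    """
    words = sql.split()
    if not words:
        raise ValueError("empty SQL statement")
    return master if words[0].upper() in WRITE_VERBS else slave


if __name__ == "__main__":
    # Hypothetical statements, just to show the routing decision.
    print(choose_server("SELECT postid FROM post WHERE ipaddress = '10.0.0.1'"))
    print(choose_server("INSERT INTO post (pagetext) VALUES ('hello')"))
```

The design point is that the machine with the fastest disks takes the writes, while the machine with the fastest CPUs and memory absorbs the search-heavy reads.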

The web load is again split between the two machines. The main OCAU website runs on the Sun box. It's mostly static and not a lot of load so could really go on either machine. Having it all on the Sun box with the master databases means I can basically consider OCAU as being all on that one machine from a backups and maintenance perspective, with the forum load and slave databases on the other machine, not really needing to be managed on any kind of regular basis. The forums web load is generally all coming from the Dell machine, but during times of heavy load I can use some simple DNS trickery to silently shift people over to the Sun box as needed, as it is already configured and ready to serve forum clients.

One other nice aspect of this setup is that we can, should we ever need to, expand capacity by throwing in another machine configured as another database slave with the forum software installed and it can pretty much instantly start sharing the forum workload and communicating with the database master.

The End Result:

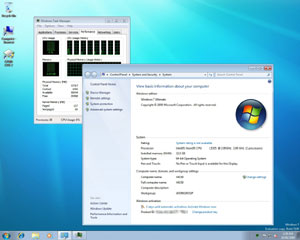

Now, I know you're going to be disappointed, but unfortunately I don't have a huge swag of benchmarks to show off these new machines. Due to time constraints my focus was on testing the machines, configuring them and getting them on-site. I did run some stress tests of course but nothing that can really be assembled into a useful set of graphs or anything. Here's a mostly unexciting screenshot of the Sun machine running under Windows 7, though:

But in terms of real-world performance, remember I said that the machines had to last 3 years and during that time we hoped the two machines together would be able to handle 2000 forum users? A week after moving OCAU over to these servers, a forum thread was linked on the popular site Slashdot. At 5:20am we had 2076 people browsing the forums. At the time, I had 100% of the forum webserver load going to the new Dell machine, with of course the main database load on the Sun, and slave database work also on the Dell. Historically when we've been linked by Slashdot, the site has fallen over within minutes. This time, the servers basically laughed it off. Neither server was being pushed in any real way, in terms of memory usage, CPU utilisation or disk access. People online at the time had no idea we were being hammered with over twice as many visitors as our normal peak. I call that a resounding success.

Of course, a final note: much kudos to Internode for providing hosting for these servers!


All original content copyright James Rolfe.

All rights reserved. No reproduction allowed without written permission.
